About the Reading Group

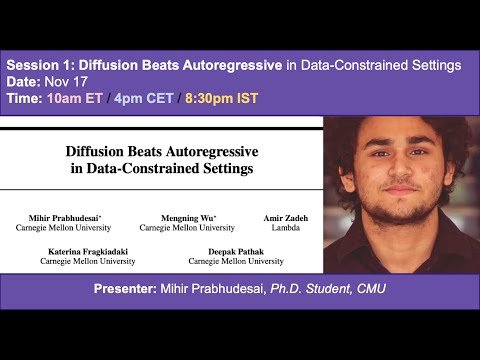

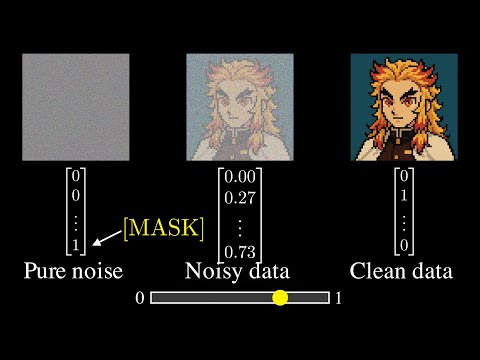

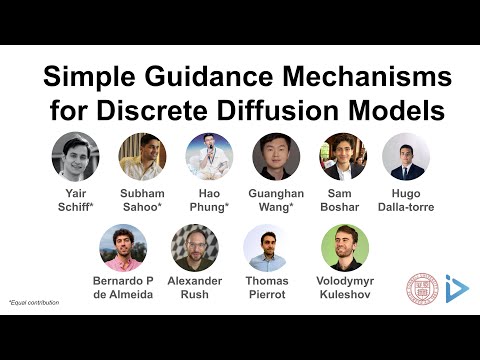

Diffusion LLMs are emerging as an alternative to traditional autoregressive LLMs, promising faster and more controllable generation, and they are rapidly gaining adoption. This reading group aims to build a community for exchanging and debating emerging ideas in this space. While our primary focus is discrete diffusion models for language, we also welcome work that extends these methods to other modalities and applications, such as molecular design and drug discovery. Each session features an author-led presentation followed by Q&A, with recordings shared on our YouTube channel.

Paper Discussions

Authors present their work, followed by discussion and Q&A

Recorded Sessions

All sessions are recorded and available on YouTube

Community

Stay informed through our email list and Twitter/X

Meet the Organizers

Subham Sahoo

Holds a PhD from Cornell Tech, where he specialized in diffusion language models. He has made foundational contributions to the field, and his work has been deployed at scale by Google, NVIDIA, and ByteDance across language generation and drug discovery.

Justin Deschenaux

PhD student in Machine Learning at EPFL, advised by Prof. Caglar Gulcehre. Previously interned at Apple MLR. His research interests include diffusion language models, fast generative models, and generalization.

Upcoming Session

November 24, 2025

PepTune: De Novo Generation of Therapeutic Peptides with Multi-Objective-Guided Discrete Diffusion

Sophia Tang and Pranam Chatterjee will explain why discrete diffusion models enable more controllable generation and demonstrate how they do so.

Time: Nov 24 (Monday) · 10 AM ET / 4 PM CET

Prior knowledge: Fundamentals of discrete diffusion

Meeting link: click here

Paper: arXiv:2412.17780

Abstract: We present PepTune, a multi-objective discrete diffusion model for simultaneous generation and optimization of therapeutic peptide SMILES. Built on the Masked Discrete Language Model (MDLM) framework, PepTune ensures valid peptide structures with a novel bond-dependent masking schedule and invalid loss function. To guide the diffusion process, we introduce Monte Carlo Tree Guidance (MCTG), an inference-time multi-objective guidance algorithm that balances exploration and exploitation to iteratively refine Pareto-optimal sequences. MCTG integrates classifier-based rewards with search-tree expansion, overcoming gradient estimation challenges and data sparsity. Using PepTune, we generate diverse, chemically-modified peptides simultaneously optimized for multiple therapeutic properties, including target binding affinity, membrane permeability, solubility, hemolysis, and non-fouling for various disease-relevant targets. In total, our results demonstrate that MCTG for masked discrete diffusion is a powerful and modular approach for multi-objective sequence design in discrete state spaces.

Stay Updated

Join our community and never miss a session